NVIDIA GPU

LayerOps can deploy services that require an NVIDIA GPU. This lets you run GPU-intensive services such as Ollama, as well as other AI/ML workloads that benefit from GPU acceleration.

Setting up GPU-enabled Instances

There are two ways to set up GPU-enabled instances in LayerOps:

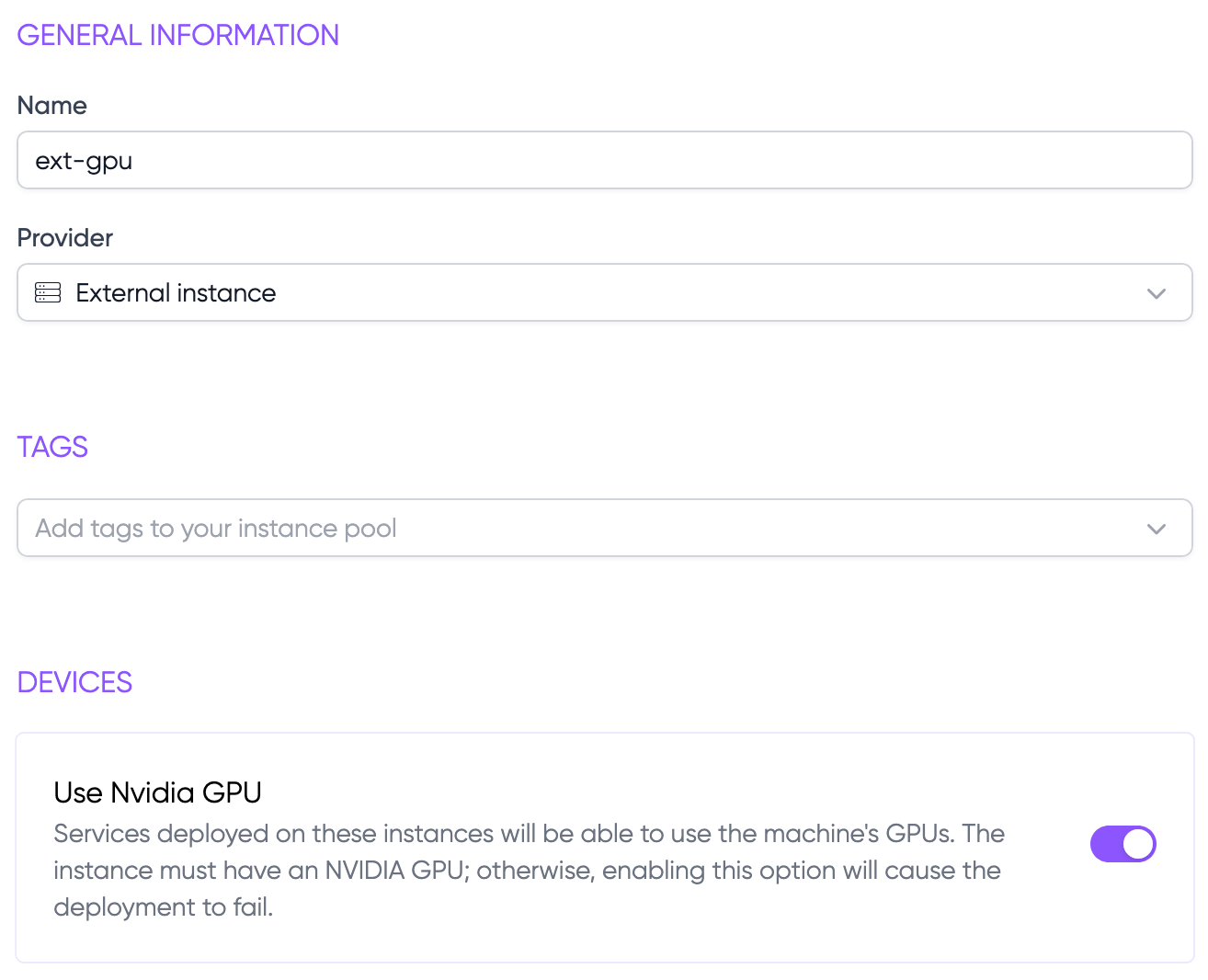

- Attach an External GPU Instance

You can attach any external machine (VM, server, or computer) running Ubuntu that has an NVIDIA GPU. When attaching the instance to LayerOps, you will need to specify that it has NVIDIA GPU capabilities.

Make sure NVIDIA drivers are properly installed on the machine before attaching it to LayerOps.
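Before attaching the machine, a quick check along these lines can confirm whether a working driver is already present. This is a minimal sketch, not a LayerOps tool: it relies on `nvidia-smi`, the utility that ships with the NVIDIA driver, and the function name `check_nvidia_driver` and its optional argument are illustrative assumptions.

```shell
#!/bin/sh
# Report whether a working NVIDIA driver is visible on this machine.
# Prints "driver-ok" or "driver-missing".
# The optional first argument (hypothetical, for testing only) overrides
# the command used for the check; it defaults to nvidia-smi.
check_nvidia_driver() {
    smi_cmd="${1:-nvidia-smi}"
    # nvidia-smi exits non-zero when it cannot communicate with the driver,
    # so both "is it installed" and "does it run" are checked here.
    if command -v "$smi_cmd" >/dev/null 2>&1 && "$smi_cmd" >/dev/null 2>&1; then
        echo "driver-ok"
    else
        echo "driver-missing"
    fi
}

check_nvidia_driver
```

A result of `driver-ok` means the driver responds and the machine is ready to be attached.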

apt install ubuntu-drivers-common && \

add-apt-repository ppa:graphics-drivers/ppa && \

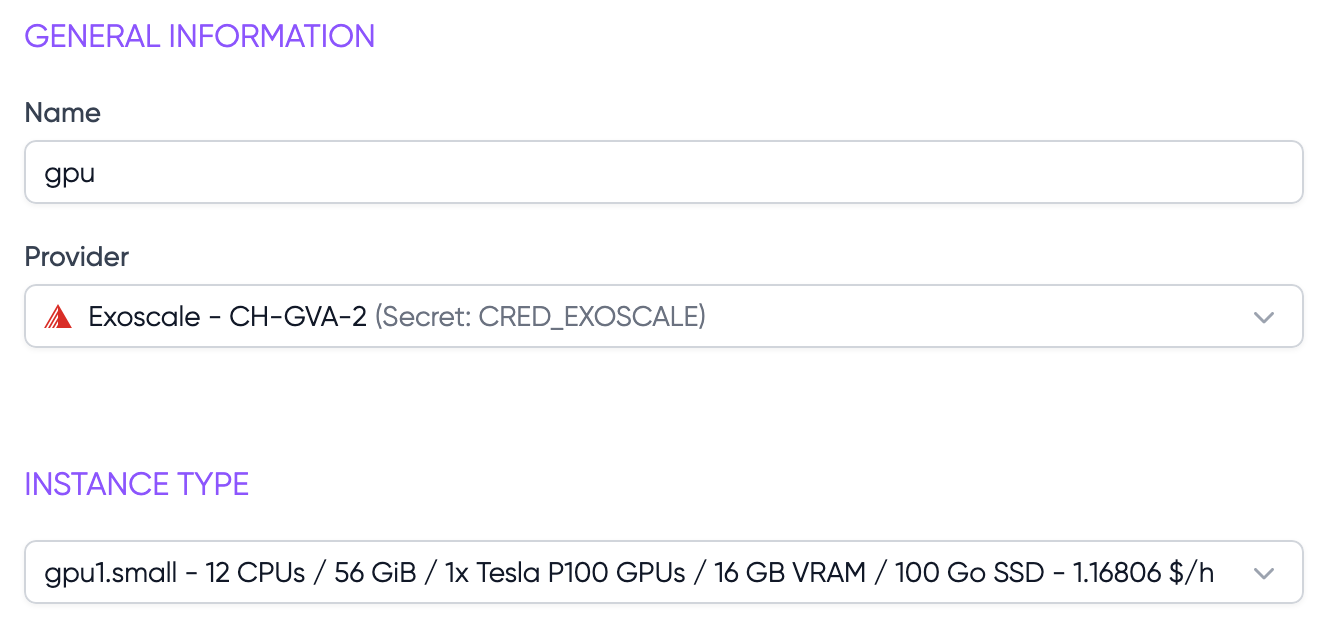

apt install $(nvidia-detector)- Deploy GPU-compatible Instances via Providers

You can create an instance pool with GPU capabilities through your configured cloud providers by selecting a GPU-compatible instance type. The pool can then scale your GPU workloads automatically based on your needs.

Deploying Services with GPU Access

To enable GPU access for your service, follow these steps:

- Create a new service by clicking on the "Create new Service" button

- Fill out your service configuration form

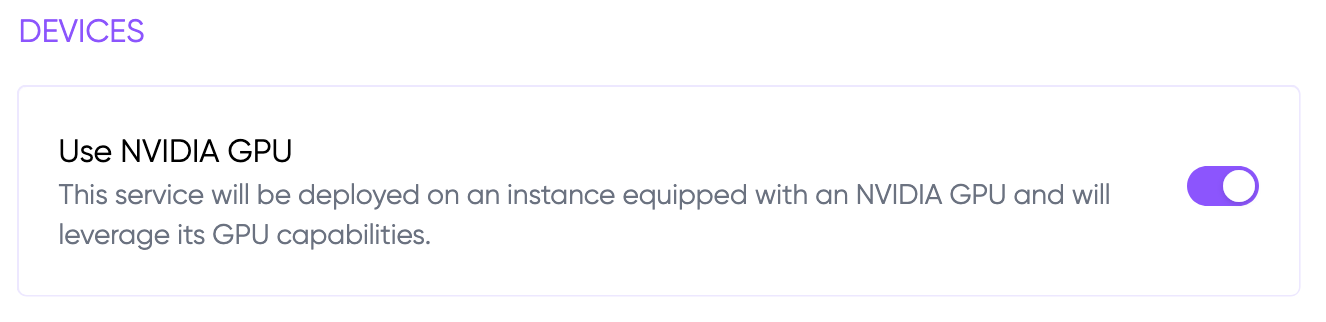

- In the "Something more?" section at the end of the form, click on the "Devices" button

- Check the "Use NVIDIA GPU" option to enable GPU capabilities for your service
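Once the service is running, you may want to confirm from inside its container that a GPU is actually visible. The sketch below assumes the GPU is exposed through the usual NVIDIA device nodes (such as `/dev/nvidia0`); the source does not describe how LayerOps passes the device through, so treat that as an assumption. The function name `gpu_status` and its optional directory argument are illustrative.

```shell
#!/bin/sh
# Print "gpu-visible" if at least one NVIDIA GPU device node exists,
# otherwise "gpu-not-visible".
# The optional first argument (hypothetical, for testing only) is the
# directory to scan; it defaults to /dev.
gpu_status() {
    dev_dir="${1:-/dev}"
    # GPUs normally appear as numbered nodes: nvidia0, nvidia1, ...
    # If the glob matches nothing, the loop sees the literal pattern,
    # which fails the -e test and falls through.
    for node in "$dev_dir"/nvidia[0-9]*; do
        if [ -e "$node" ]; then
            echo "gpu-visible"
            return 0
        fi
    done
    echo "gpu-not-visible"
}

gpu_status
```

If the service image includes `nvidia-smi`, running it in the container is a more thorough check, since it also reports the GPU model and driver version.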