Create service

This page assumes that you have already created and selected an environment.

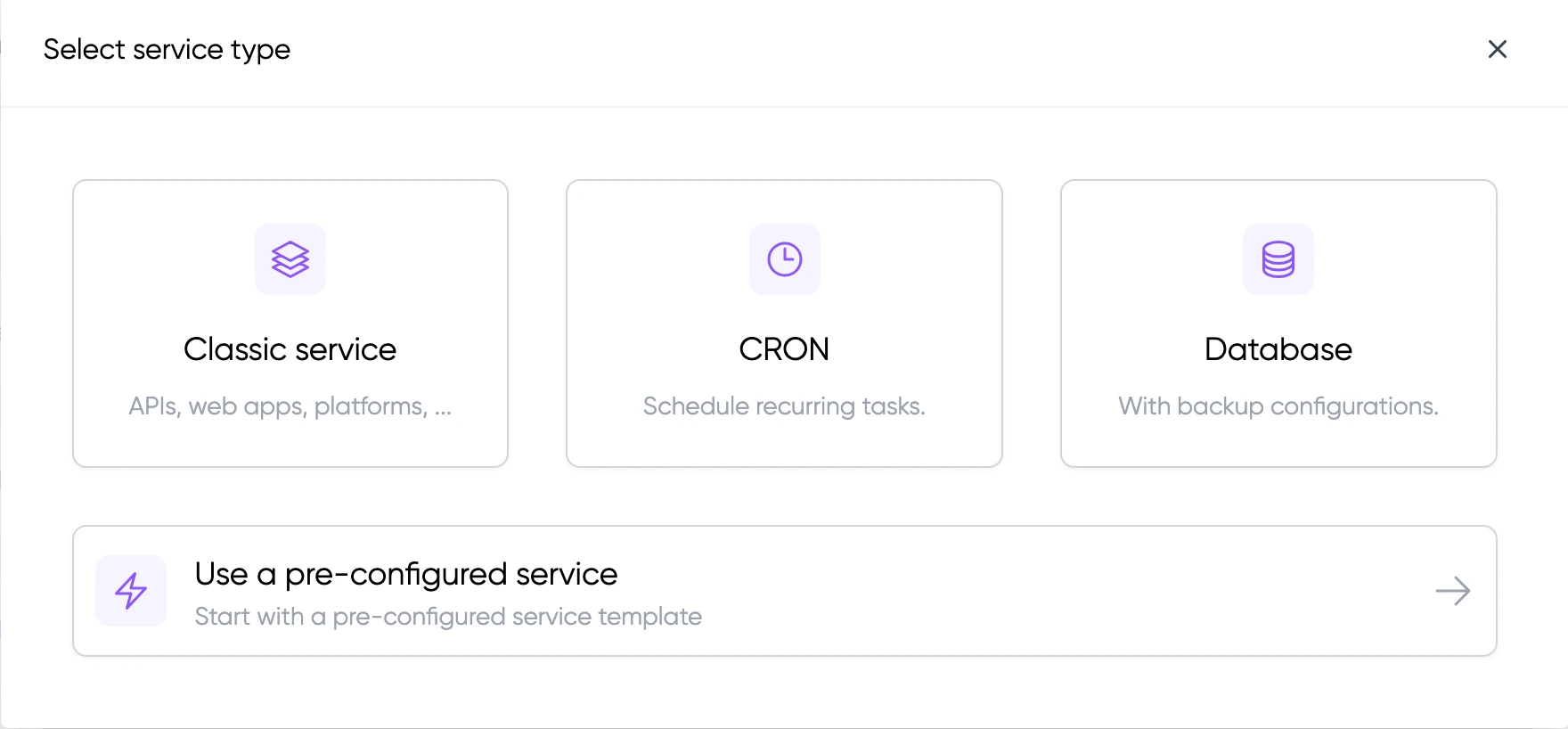

When creating a service, you can choose between:

- Classic service: For APIs, web apps, platforms, and other applications

- CRON: For scheduling recurring tasks

- Database: With backup configurations

- Pre-configured service: Start with a pre-configured template that can include one or multiple services with optimized settings

The creation form adapts to the type you choose:

- Pre-configured service: some sections may be hidden because the template already configures them

- CRON service: specific scheduling options are shown

- Database service: additional backup configuration options are displayed

- Classic service: all standard configuration options are shown

Docker configuration

You can pull your image from a private registry (see how to add a private registry) or from the default registry, Docker Hub (https://hub.docker.com/).

Ports

A service can listen on one or more ports. For each port, you can add a load balancer rule to make it public, or keep it strictly private within your environment so that only other services can reach it.

To add one or more ports, click on the "Add port" button. You can now define the port on which your service listens.

If you want your service to be accessible from the internet, check the "Add load balancer rule" option.

If you have already defined custom domains, you can enter them here to make this service and port accessible at this address.

This rule can be edited or added later from load balancer routing rules.

Health check

Health checks help LayerOps determine when a service is available to receive traffic. While health checks are optional, they play a crucial role in ensuring the availability and reliability of your services.
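
As an illustration of what a health check can probe, here is a minimal sketch of a service exposing an HTTP health endpoint. It assumes an HTTP-based check; the `/healthz` path and the `HealthHandler` and `make_server` names are hypothetical and are not LayerOps settings.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers 200 on the (hypothetical) /healthz path and 404 elsewhere."""

    def do_GET(self):
        if self.path == "/healthz":
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_response(404)
            self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the demonstration output quiet


def make_server(port: int) -> HTTPServer:
    """Create the server; port 0 asks the OS for any free port."""
    return HTTPServer(("127.0.0.1", port), HealthHandler)


# Local demonstration: start the server, probe it once, then stop it.
server = make_server(0)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_port}/healthz"
with urllib.request.urlopen(url) as response:
    status = response.status
print(status)  # 200
server.shutdown()
server.server_close()
```

In a real container the server would bind to the port declared in the "Ports" section and keep running; the probe above only simulates what a health checker might do.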

Resource requirements

By defining the necessary resources (CPU and RAM) for your service, you enable us to efficiently allocate and distribute your services across your instances, ensuring optimal performance and functionality.

Later, if you enable autoscaling, we will use this information to increase or decrease the number of copies of this service according to its consumption.

Quantity (and autoscaling)

In the "quantity" section, you can fine-tune the deployment of your services. You can opt to specify a fixed quantity of services to deploy, or you can enable autoscaling for dynamic adjustments based on your service's demand.

With autoscaling, you can set both minimum and maximum service quantities. This allows you to maintain control over your resources while ensuring that your services can adapt to fluctuating workloads.

Additionally, you can configure autoscaling rules based on CPU and RAM utilization, determined as a percentage of the values you specified in "Resource Requirements".
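
The relationship between resource requirements, utilization percentages, and the minimum and maximum quantities can be sketched as follows. This is an illustrative model only: the `AutoscalingRule` fields, the thresholds, and the one-copy-at-a-time step are assumptions, not the actual LayerOps scaling algorithm.

```python
from dataclasses import dataclass


@dataclass
class AutoscalingRule:
    """Hypothetical rule shape; field names are illustrative only."""
    min_quantity: int
    max_quantity: int
    scale_up_percent: float    # add a copy when utilization exceeds this
    scale_down_percent: float  # remove a copy when utilization falls below this


def utilization_percent(used: float, requested: float) -> float:
    """Consumption expressed as a percentage of the requested resource."""
    return 100.0 * used / requested


def next_quantity(current: int, cpu_pct: float, ram_pct: float,
                  rule: AutoscalingRule) -> int:
    """Return the next number of copies, clamped to the rule's bounds."""
    highest = max(cpu_pct, ram_pct)
    if highest > rule.scale_up_percent:
        desired = current + 1
    elif highest < rule.scale_down_percent:
        desired = current - 1
    else:
        desired = current
    return max(rule.min_quantity, min(rule.max_quantity, desired))


rule = AutoscalingRule(min_quantity=1, max_quantity=4,
                       scale_up_percent=80, scale_down_percent=30)
cpu = utilization_percent(used=450, requested=500)  # 90.0
ram = utilization_percent(used=128, requested=512)  # 25.0
print(next_quantity(current=2, cpu_pct=cpu, ram_pct=ram, rule=rule))  # 3
```

Because utilization is measured against the values from "Resource Requirements", changing those values shifts the point at which a given threshold is reached.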

Deployment constraints

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

Within the "Deployment Constraints" section, you can configure where your service is deployed.

- You can choose one or more tags. By defining a tag, your service will be deployed on an instance pool configured with that tag.

- You can choose a specific provider, which is useful when you need to specify a particular region or cloud infrastructure.

- You can select a particular instance pool, ensuring your service runs on one of the instances within that pool.

You can also provide several constraints.

Link to other services

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

The "Links to other services" feature allows communication between services within your environment. For each link, you need to specify:

- The target service ID (which can be a service that doesn't exist yet)

- The port number on which you want to communicate with that service

Once you've set up the service relationship, you can specify which environment variables LayerOps should inject with the relevant information about this related service. Here are the variables that LayerOps will inject:

- Host: Information about the host of the related service. For instance, this might be an IP address like 127.0.0.1.

- Port: The port of the related service, like 80.

- Address: The complete address of the related service, like 127.0.0.1:80.
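
Inside the service, the injected values are read like any other environment variable. The sketch below assumes you named the variables `MYSQL_HOST` and `MYSQL_PORT` when configuring the link; those names and the `linked_service_address` helper are hypothetical.

```python
import os


def linked_service_address(host_var: str, port_var: str, env=os.environ) -> str:
    """Build "host:port" from two injected variables.

    The variable names are whatever you chose when configuring the link.
    """
    host = env[host_var]
    port = int(env[port_var])  # fails early if the port is not numeric
    return f"{host}:{port}"


# Simulated environment standing in for the variables LayerOps would inject.
example_env = {"MYSQL_HOST": "127.0.0.1", "MYSQL_PORT": "80"}
print(linked_service_address("MYSQL_HOST", "MYSQL_PORT", env=example_env))  # 127.0.0.1:80
```

If you inject the Address variable instead, the service can use that single value directly rather than joining host and port itself.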

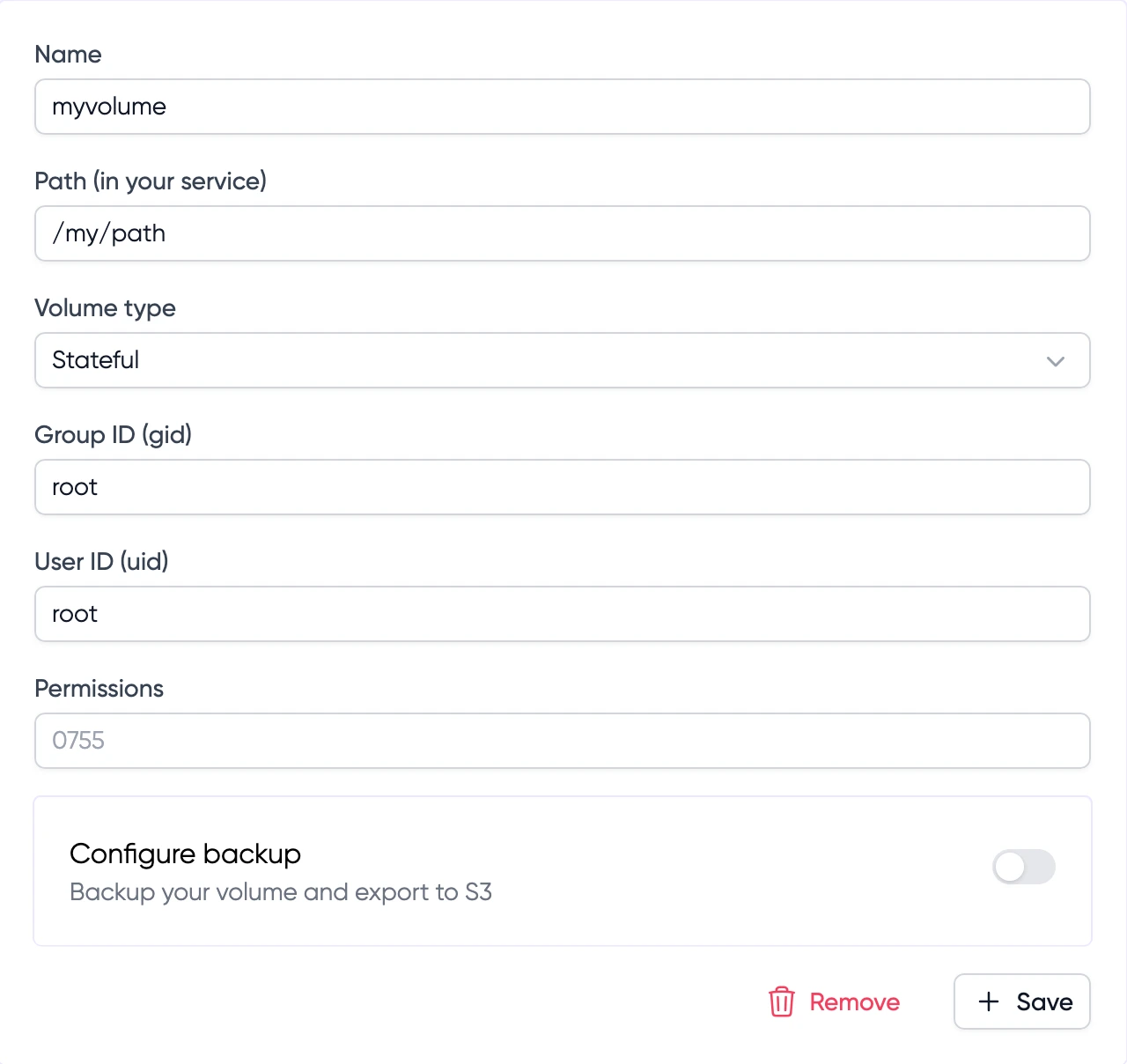

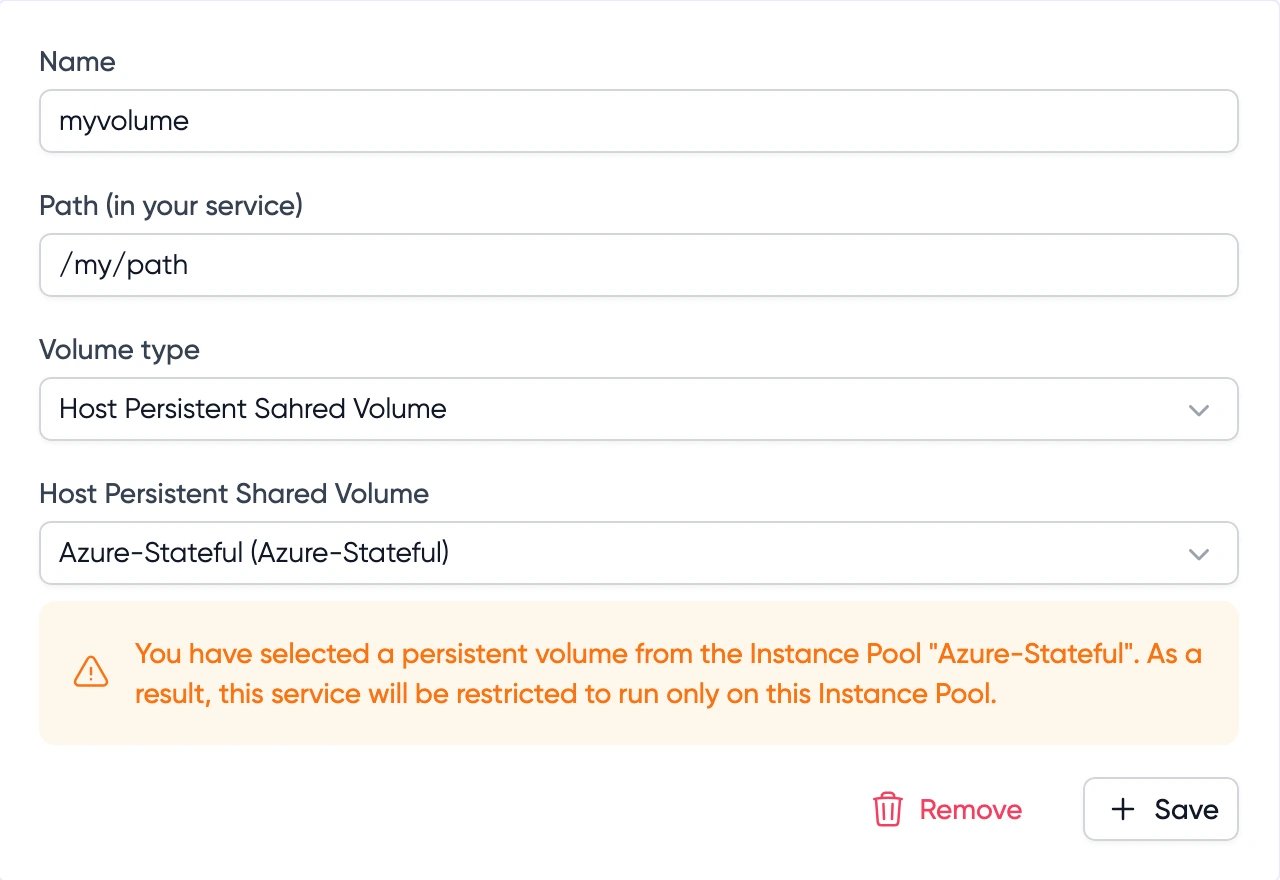

Volumes

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

LayerOps offers three types of volumes:

Stateful Volume

A stateful volume creates and stores data on the instance where the service is deployed. This volume is:

- Exclusive to the service it's attached to

- Not shared with other services

- Persisted across service restarts on the same instance

For stateful volumes, you can configure automated backups to any S3-compatible storage. See backups for more information.

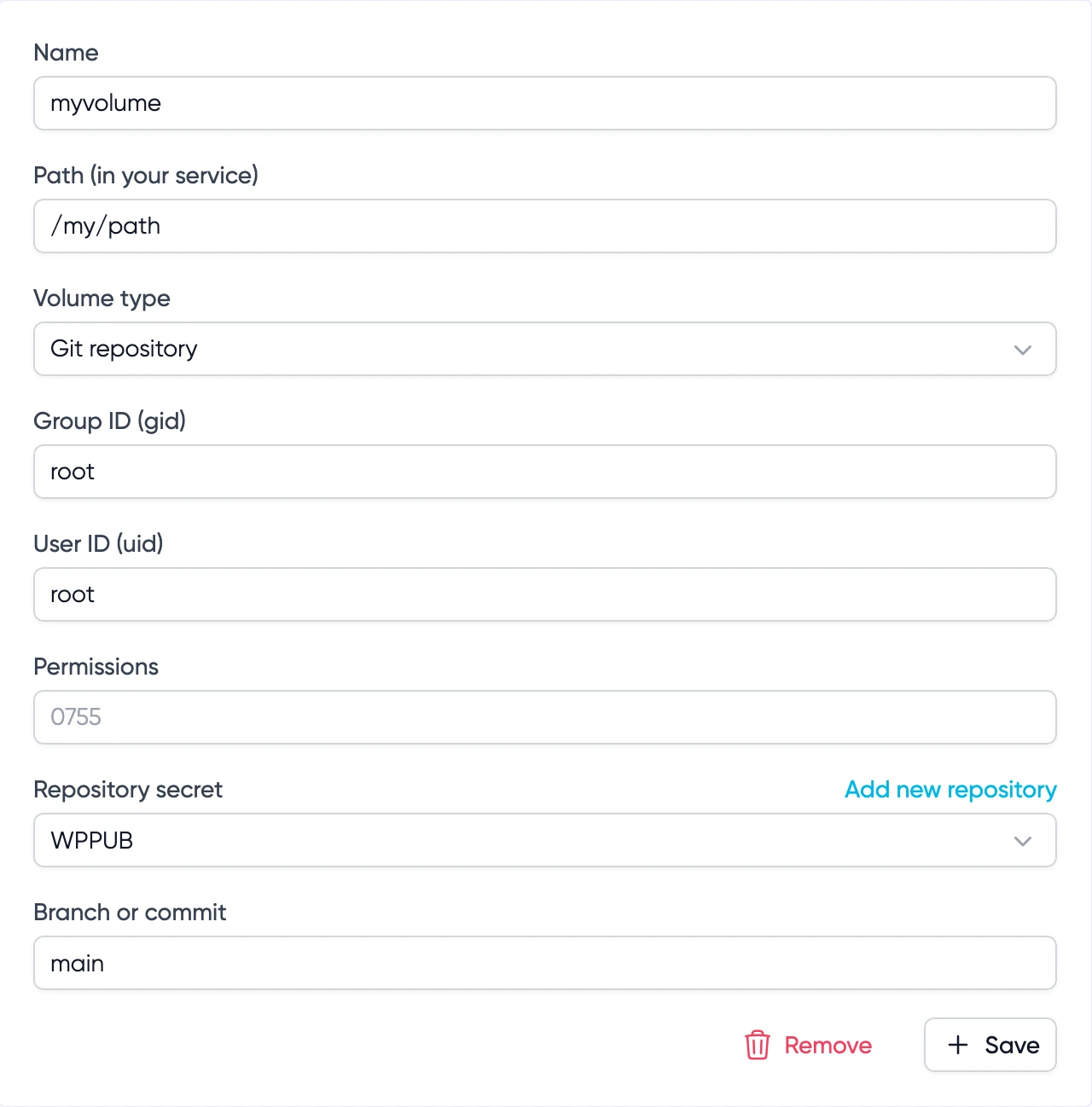

Git Repository Volume

This volume type automatically pulls content from a Git repository. To update the content, you can manually trigger a "reload" at the service level.

Host Persistent Shared Volume

This volume type links to an instance pool's volume, which means:

- The service will be constrained to that specific instance pool

- The service cannot be deployed to other instance pools

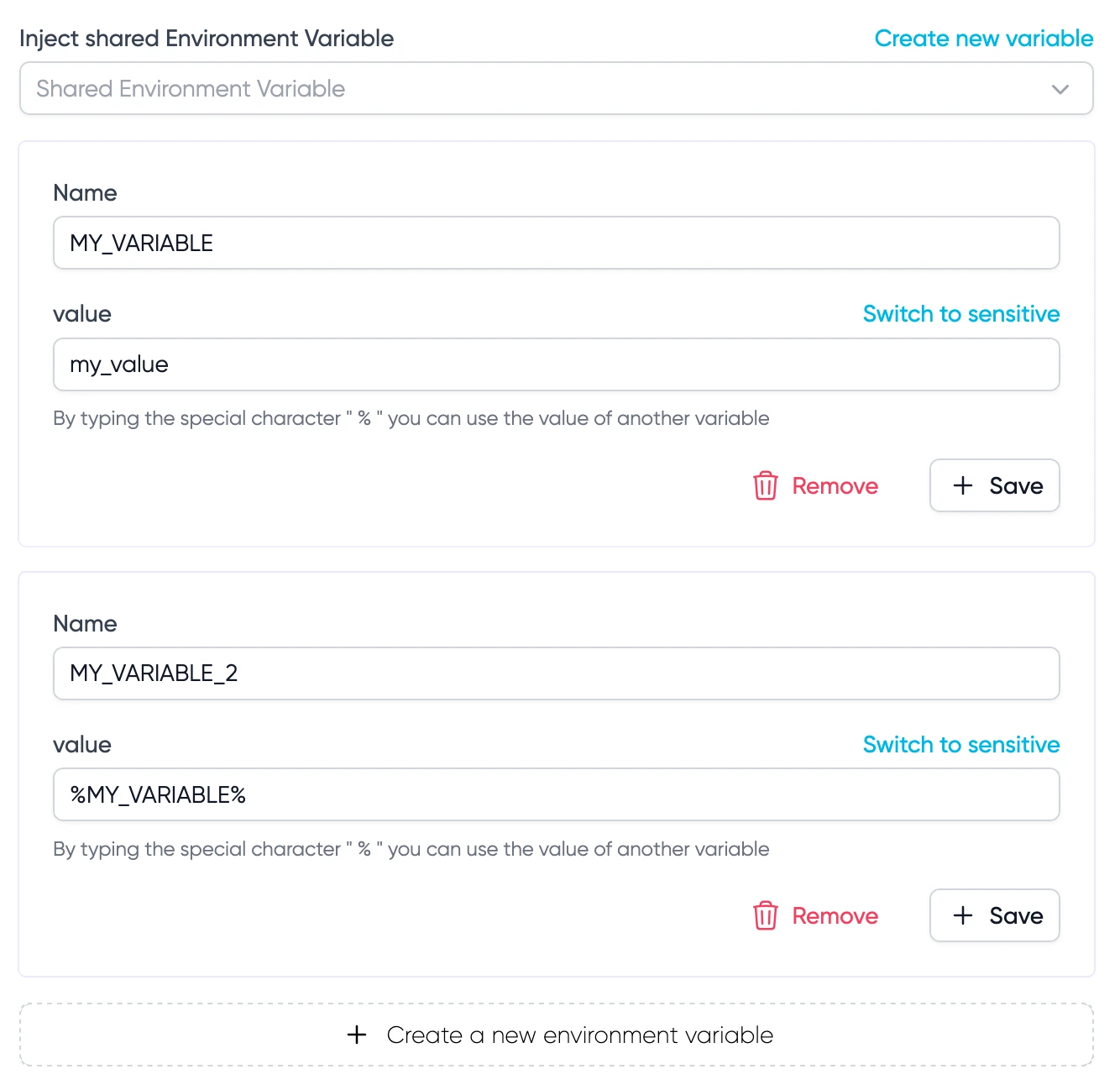

Environment variables

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

You can configure environment variables for your service in two ways:

- Create new variables specific to this service

- Inject shared environment variables from your environment

Simply enter the name and value for each variable you want to create. You can mark sensitive values by clicking "Switch to sensitive" - these values will be encrypted and hidden from view.

Using the %VARIABLE_NAME% syntax, you can reference the value of another variable. For example, if you have a variable named MY_VARIABLE with value my_value, you can use %MY_VARIABLE% in another variable's value to inject my_value.

CRON

This option can be added once all the required steps have been completed for service type "CRON". You will find this option under "Something more?".

Services of type CRON can have a CRON configuration, which specifies when the service should be started.

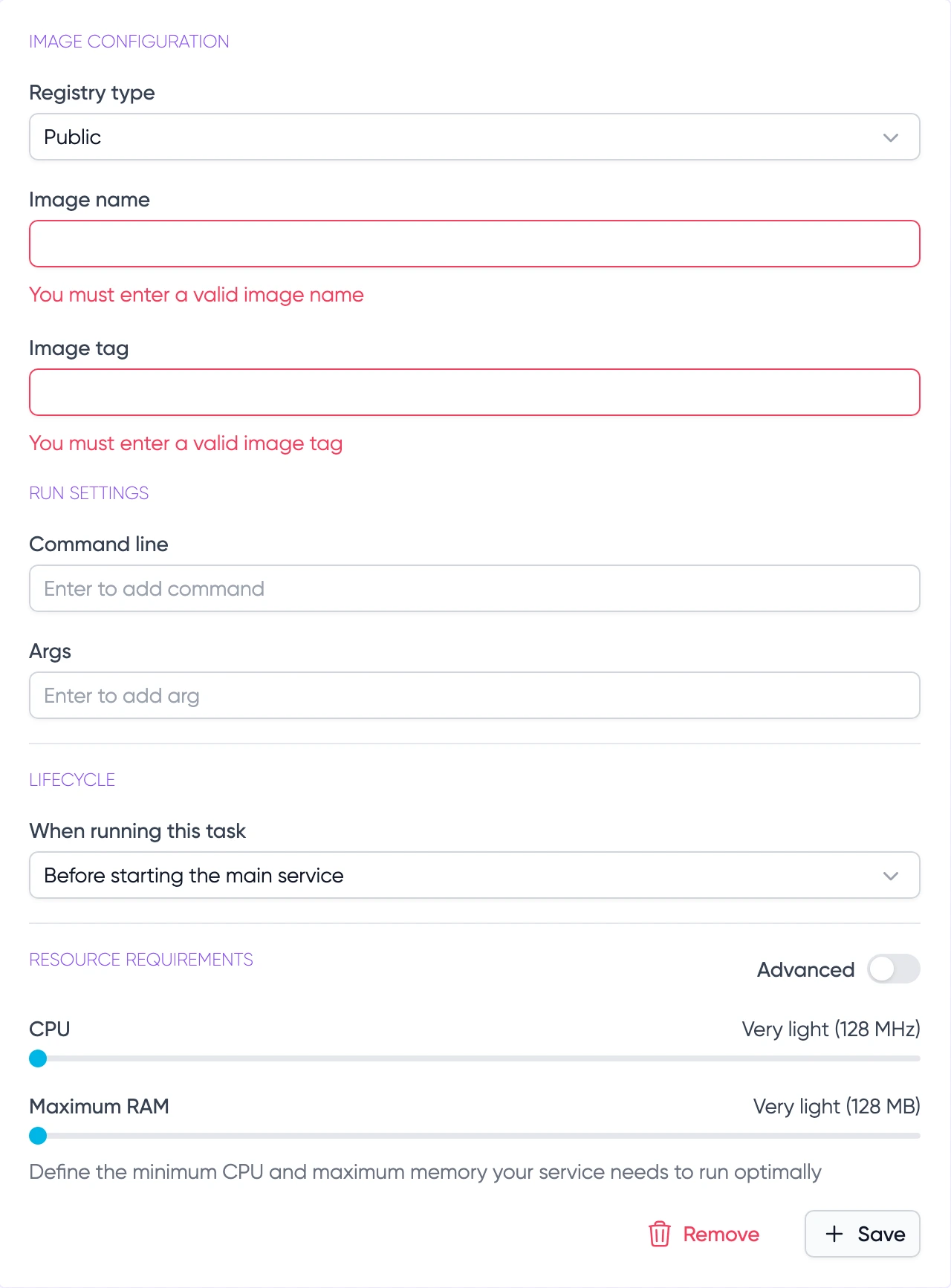

Side task

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

Side tasks are additional services that run alongside your main service. You can configure zero or multiple side tasks, each with its own configuration.

Run Settings

You can configure when the side task should run:

- Before starting the main service: The side task must complete before the main service starts

- Along with the main service: The side task runs in parallel with the main service

Side tasks also have the following characteristics:

- They run on the same instance as the main service

- They share the same environment variables as the main service

- They can be used for initialization tasks, health checks, or supporting processes

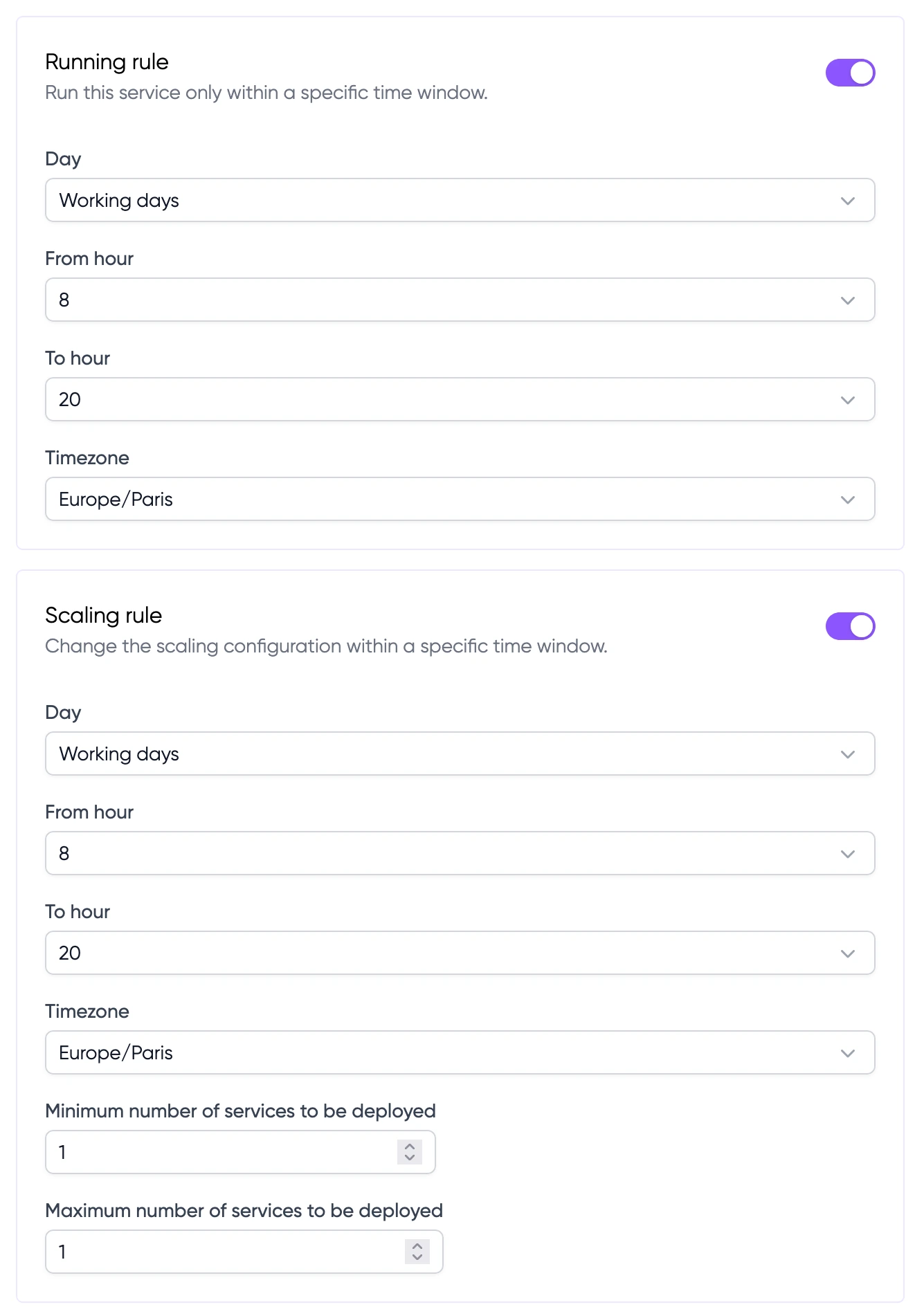

Schedule

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

Services can be scheduled to start and stop at specific times, and you can plan changes to scaling rules.

Read more about schedule configuration.


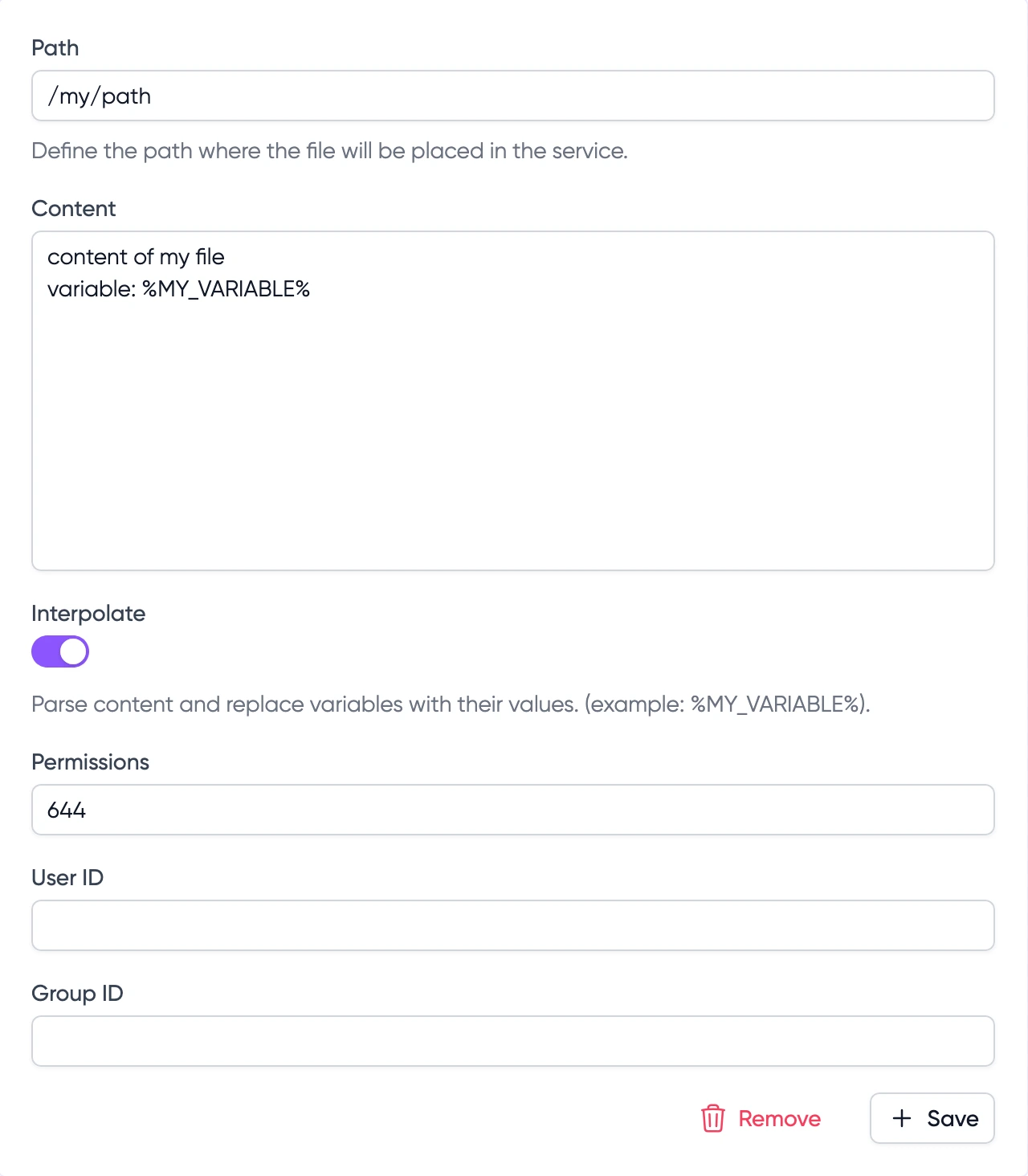

File

This option can be added once all the required steps have been completed. You will find this option under "Something more?".

The File feature allows you to create configuration files that will be placed inside your service container. For each file, you can specify:

- Path: The location where the file will be placed inside the container

- Content: The content of the file

- Permissions: File permissions in Unix format (e.g., 644)

- User ID and Group ID: Optional ownership settings
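
To make the permissions field concrete: a value such as 644 is an octal Unix mode (read and write for the owner, read-only for group and others). The sketch below shows the equivalent operation on a local file; the `write_config_file` helper, the file name, and the file content are hypothetical, and this is not how LayerOps itself places files.

```python
import os
import stat
import tempfile


def write_config_file(path: str, content: str, permissions: str = "644") -> None:
    """Write content to path, then apply Unix permissions given as an octal string."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(content)
    os.chmod(path, int(permissions, 8))  # "644" is parsed as octal 0o644 (rw-r--r--)


with tempfile.TemporaryDirectory() as directory:
    target = os.path.join(directory, "app.conf")  # hypothetical file name
    write_config_file(target, "log_level: info\n", permissions="644")
    mode = stat.S_IMODE(os.stat(target).st_mode)
    print(oct(mode))  # 0o644 on Unix-like systems
```

The optional User ID and Group ID would correspond to a call such as `os.chown(path, uid, gid)`, which usually requires elevated privileges and is therefore left out of the sketch.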

Variable Interpolation

When the "Interpolate" option is enabled, LayerOps will parse the file content and replace variables with their actual values. You can reference:

- Environment variables

- Injected shared environment variables

- Variables from linked services

Use the syntax %VARIABLE_NAME% to reference any variable. For example:

database_host: %MYSQL_HOST%

database_port: %MYSQL_PORT%

api_key: %API_KEY%
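
The substitution described above can be approximated with a few lines of Python. This is a minimal sketch, not the LayerOps implementation: the `interpolate` function, the sample values, and the choice to leave unknown variables untouched are all assumptions.

```python
import re

# Matches %NAME% where NAME looks like a typical environment variable name.
PLACEHOLDER = re.compile(r"%([A-Za-z_][A-Za-z0-9_]*)%")


def interpolate(text: str, variables: dict[str, str]) -> str:
    """Replace each %NAME% with its value; unknown names are left untouched."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return variables.get(name, match.group(0))
    return PLACEHOLDER.sub(substitute, text)


template = (
    "database_host: %MYSQL_HOST%\n"
    "database_port: %MYSQL_PORT%\n"
    "api_key: %API_KEY%\n"
)
values = {"MYSQL_HOST": "127.0.0.1", "MYSQL_PORT": "3306", "API_KEY": "example-key"}
rendered = interpolate(template, values)
print(rendered)
```

With the sample values, the first rendered line is `database_host: 127.0.0.1`. The same idea applies to environment variable values that reference other variables with %VARIABLE_NAME%.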